Creating “Orbit, a Scalable Laptop Composition”

Orbit: A Scalable Laptop Composition is an exploration of digital music technology and laptop performance formats. The outcome includes the use of live coding, do-it-yourself (DIY) digital instruments, graphical user interfaces (GUI) and gestural controllers in a composition for the performance formats of a solo performer, laptop orchestra and a geographically dispersed, networked laptop ensemble. The Cybernetic Orchestra, the laptop orchestra at McMaster University (Ogborn 2012a), has been the key creation and research resource. The overall process has been guided by Maurice Merleau-Ponty’s phenomenology and Bruno Latour’s notion of the nonmodern, both providing perspectives regarding “the body” in relation to technology.

The overarching research methodology has been action research, a cyclical process of planning goals, acting out this plan and reflecting on the results so as to inform the next cycle (Kemmis and McTaggart 1998). This paper follows the chronology of the research through four cycles, each resulting in the creation of one or more digital instruments and, in three of the four cycles, an accompanying composition for laptop performance. The structure of the laptop orchestra (Trueman 2007; Smallwood, Trueman, Cook and Wang 2008) has provided the technical foundation and creative resources. The performance traditions and techniques of live coding (Collins, McLean, Rohrhuber and Ward 2003; Magnusson 2011; Wang 2008), digital instruments and gestural control (Drummond 2009; Fiebrink, Wang and Cook 2007; Magnusson 2010; Paine 2009a and 2009b) and networked performance (Fields 2012; Kapur, Wang, Davidson and Cook 2005; Schroeder and Rebelo 2009) are core features of the project.

Points of Reference

The Cybernetic Orchestra (Ogborn 2012a), the laptop orchestra at McMaster University, emphasizes participatory culture, allowing for “relatively low barriers to artistic expression and civic engagement, strong support for creating and sharing one’s creations and some type of informal mentorship where what is known by the most experienced is passed along to the novices” (Jenkins 2006). Orbit was created with a conscious effort to allow for a “low entry fee with no ceiling on virtuosity” (Wessel and Wright 2002) such that each component of the piece, including building and performing with the instruments, would be accessible to the novice, as well as provide a context for the more experienced to implement and share their knowledge. [1. For more on the topic, see David Ogborn’s article in this issue of eContact!, Scalable, Collective Traditions of Electronic Sound Performance: A Progress Report (https://econtact.ca/15_2/ogborn_cybernetic.html).]

Merleau-Ponty’s phenomenology situates the body as the central vessel for each individual’s understanding — the embodied experience. The body is not separate from the world within which it exists, rather, it is the means by which one experiences and has the world revealed to them. This position puts the subjective experience of “the body” at the forefront of one’s understanding, an understanding that stems from one’s intentional actions. According to Merleau-Ponty, “[t]o understand is to experience the harmony between what we aim at and what is given, between the intention and the performance — and the body is our anchorage in a world” (Merleau-Ponty 1962, 167). Merleau-Ponty describes a blind man’s use of a stick as a means of perception beyond his physical body, “the stick is no longer an object perceived by the blind man, but an instrument with which he perceives. It is a bodily auxiliary, an extension of the bodily synthesis” (Ibid., 176). Composition and performance with digital technology calls into question this notion of a bodily extension, “an extension of the bodily synthesis.”

Latour acknowledges the existence of human-technological “hybrids” through his notion of the nonmodern, part of his attempt to “re-establish symmetry between the two branches of government, that of things — called science and technology — and that of human beings” (Latour 1991, 138). According to Latour, Modernism denotes two distinct, contradictory practices: that of translation and that of purification. Together, they simultaneously create and deny the existence of “hybrids,” mixtures of nature with culture and human with nonhuman. The act of translation is responsible for the proliferation of “hybrids”, while the act of purification is responsible for denying their existence. The nonmodern represents the opposite position, that the pure states of human and non-human are not all encompassing and that “hybrids… are just about everything” (Ibid., 47).

Phenomenology and the nonmodern are particularly pertinent to composing and performing with digital music technology, as they directly relate to the (dis)embodied experience (Corness 2008; Magnusson 2009; Schroeder and Rebelo 2009) and the disintegrating distinction between the creative roles of the composer, performer, sound designer, instrument designer and systems designer (Cascone 2003; Collins, McLean, Rohrhuber and Ward 2003; Drummond 2009). The use of technology in musical contexts creates a fracture between the human gesture and a resulting sound morphology (Paine 2009a), such that mapping gestural parameter data to sonic parameters has become, arguably, “the most integral feature of new digital musical instruments” (Magnusson 2010). It is precisely this gap that the composer must attend to in order to create an embodied performing experience between the performer and the instrument.

Cycle One: Thunder Drone

In the first cycle of the research, the goal was to create a real-time granulation instrument in Max/MSP for the collaborative work Beautiful Chaos, a piece for a dancer with Wiimotes and laptop orchestra. This instrument became known as Thunder Drone, having two main functions: the granulation and time stretching of an audio file (a recording of thunder), and the creation of a rhythmic structure by enveloping the processed signal.
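The two functions described above can be illustrated outside Max/MSP. The following is a minimal Python sketch, not the Thunder Drone patch itself: it assumes a simple overlap-add granulator with a Hann window for the time stretching and a plain on/off gate for the rhythmic enveloping. The function names, grain size and hop values are all hypothetical.

```python
import math

def hann(n):
    """Hann window of length n (n >= 2), used here as the grain envelope."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def granular_stretch(samples, stretch, grain_len=64, hop_out=32):
    """Time-stretch by overlap-adding windowed grains (illustrative only).

    Grains are written every hop_out samples but read every
    hop_out / stretch samples, so the output is roughly `stretch` times longer.
    """
    if len(samples) < grain_len:
        raise ValueError("input must be at least one grain long")
    window = hann(grain_len)
    hop_in = hop_out / stretch
    n_grains = int((len(samples) - grain_len) / hop_in) + 1
    out = [0.0] * ((n_grains - 1) * hop_out + grain_len)
    for g in range(n_grains):
        read = int(g * hop_in)   # where the grain is taken from
        write = g * hop_out      # where the grain is placed
        for i in range(grain_len):
            out[write + i] += samples[read + i] * window[i]
    return out

def rhythmic_gate(samples, period, duty=0.5):
    """Impose a pulse: pass samples during the 'on' part of each period."""
    on = int(period * duty)
    return [s if (i % period) < on else 0.0 for i, s in enumerate(samples)]

if __name__ == "__main__":
    source = [math.sin(2 * math.pi * 5 * i / 1000) for i in range(1000)]
    stretched = granular_stretch(source, stretch=2.0)
    pulsed = rhythmic_gate(stretched, period=200, duty=0.25)
    print(len(source), len(stretched), len(pulsed))
```

In this sketch the stretch factor and the gate period are independent, which mirrors the separation between processing the thunder recording and shaping it rhythmically.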

Two challenges arose during the creation of Thunder Drone. The first was building a specific instrument to be used for performance. Magnusson describes the creation of new digital instruments as a process of “designing constraints” (Magnusson 2010). Audio programs can be split into two categories: restrictive programs such as Logic, Reason, Pro Tools and Cubase, and non-restrictive programs such as SuperCollider, Max/MSP and ChucK (Ibid.). The use of non-restrictive programs is common in the creation of digital instruments due to the flexibility they provide, yet, due to this flexibility, one often attempts to create an instrument that can accomplish as much as possible, to a fault. “Designing constraints” became a useful mode of thinking, for in the early stages of Thunder Drone, many options were implemented to make the instrument as general as possible. Rethinking the process as designing constraints, Thunder Drone was stripped of all “unnecessary” components such that the performers could focus on the relevant functionalities.

The second challenge was the lack of control of the available parameters. Once familiarity is gained with the general functioning of Thunder Drone, there is a natural desire to control multiple parameters at once. This was partially accommodated by the use of the two-dimensional fader, but it still resulted in a restrictive performance, as it requires linking two parameters together, a natural constraint of the native controls of the computer.

Cycle Two: “In the Midst”

The second cycle of action research focused on live coding, with the goal of producing a live coding composition in the ChucK programming language. The result was the composition In the Midst. This piece reflects the general practices of the Cybernetic Orchestra in relation to the principles of participatory culture and the notion of a “low entry fee”.

The composition In the Midst has a simple form, consisting of three sections that explore four pre-given shreds of code: a rhythmic, melodic, harmonic and drone shred. The melodic, harmonic and rhythmic shreds are designed for live coding by providing a simple code structure that allows the user to modify basic parameters, while also leaving open the possibility to modify the code to more complex structures based on the user’s level of experience. The drone shred generates a simple GUI that provides three faders for the control of its parameters, with a fourth visualizing the duration before the shred is removed.

Two main challenges arose during the creation of In the Midst. The first was creating an instrument / shred that was engaging for the performer. Composing in this context is not simply the manifestation of sonic imagination, or the exploration of material — it also needs to consider process and physical activity. The challenge is not to reduce the performers to anthropomorphic extensions of otherwise practical and efficient algorithmic and automated processes. There are various methods for creating performer engagement. Scott Smallwood’s piece On the Floor incorporates a game such that the performers are “playing a simple slot machine,” and the sounds produced are “simply a by-product of each person’s game play” (Smallwood, Trueman, Cook and Wang 2008).

Improvised live coding with a laptop orchestra (like improvisation in general) encourages a “process type” thinking, which supplements or contrasts with the “result type” thinking one finds in many other musical situations. In this context, the compositional focus shifts from the sonic result to the composition of the instruments. Richard Dudas describes this situation by noting that: “Very often when working with technology, it is the instrument that must first be composed in order to have performance and, consequently, improvisation” (Dudas 2010). Designing instruments defines the sonic landscape of a piece, while providing guidelines for the performers to improvise within creates movement and sections. Improvisation is difficult to define clearly, though it can be understood in part as a process of listening, reacting, augmenting and creating (Thom Holmes in Dudas 2010). This situation is complicated by the Western classical music tradition’s tendency to use the term “improvisation” interchangeably with “indeterminacy” and “chance music” (Welch 2010). In live coding improvisation, there is a disintegrating distinction between creative roles as both computers and humans are understood as performer / composers. The human can improvise code to algorithmically produce indeterminate sonic results over a duration of time, thus improvising the creation of the “computer as composer / performer”, while improvising code that directly creates determinate sonic results — improvising an improviser while improvising sonic results — a situation where the improvisation of the human and the indeterminate processes of the computer are truly synthesized into a single action.

Cycle Three: “I Can’t Hear Myself Think”, SuperCollider and JackTrip

The third cycle of action research focused on geographically networked performance, with the goal of creating a live coding piece in SuperCollider. The result was the composition I Can’t Hear Myself Think. Along with exploring the practicalities of a geographically distributed network laptop ensemble, the piece provided an opportunity to introduce SuperCollider to the orchestra, as well as investigate the real-time implementation of algorithmic processes to control sonic parameters.

I Can’t Hear Myself Think is composed for a minimum of four performers — the hub performer and three instrument performers who participate in a guided improvisation with one of three provided SuperCollider instruments: Rhythm Cloud, Coloured Noise and Convo Mod. The hub performer is responsible for setting up and maintaining the JackTrip network that is used to distribute audio to all other performers, including the original source material used by the three instruments.

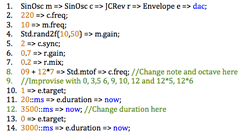

The hub performer uses a single-coil telephone pickup to capture the electromagnetic radiation from their laptop computer, which is then sent to the other performers. The performance situation and instructions are the same for each of the instrument performers. There are three available control parameters with a fixed range. The first instruction is to explore the given range of the initial parameter with the X‑dimension of their computer’s mouse (the Y‑dimension controls the amplitude). Once a particular region within the fixed range is found by the performer to be “desirable” (the parameter output is displayed in the SuperCollider “Post” window), the range is inserted into a simple algorithmic function that dynamically controls this initial parameter. The performer then starts the algorithmic function, thus automating the control of the first parameter, and follows the same instructions for the second available parameter. Once the first two parameters are automated, the performer improvises with the third parameter. The example provided is for the Rhythm Cloud instrument, which combines pitch shifting, reverberation and a low-pass filter with a combination of stochastic and deterministic impulses. Figure 5 shows the Synth definition as well as the code to control and automate the density parameter.
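The explore-then-automate procedure can be sketched in a few lines. The Python example below is an illustration under stated assumptions rather than the SuperCollider code of the piece: the source does not specify the "simple algorithmic function", so a bounded random walk stands in for it, and the names `map_mouse` and `automate` as well as the numeric ranges are hypothetical.

```python
import random

def map_mouse(x_norm, lo, hi):
    """Map a normalised mouse position (0..1) linearly onto [lo, hi]."""
    x = min(1.0, max(0.0, x_norm))
    return lo + x * (hi - lo)

def automate(lo, hi, steps, max_step=0.1, seed=None):
    """Bounded random walk inside the range the performer selected.

    Assumption: this stands in for the unspecified algorithmic function.
    Each step moves by at most max_step of the range and is clamped to it.
    """
    rng = random.Random(seed)
    span = hi - lo
    value = rng.uniform(lo, hi)
    out = []
    for _ in range(steps):
        value += rng.uniform(-max_step, max_step) * span
        value = min(hi, max(lo, value))
        out.append(value)
    return out

if __name__ == "__main__":
    # Step 1: explore the full fixed range by hand (hypothetical range 1..40).
    print(map_mouse(0.5, 1.0, 40.0))
    # Step 2: hand a narrower, "desirable" region over to the automation.
    print(automate(12.0, 18.0, steps=5, seed=1))
```

The design point is that the performer's listening determines the bounds, while the algorithm only moves within them, freeing the performer to attend to the next parameter.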

Two challenges were encountered during the process of creating I Can’t Hear Myself Think: the complexity of the domestic network setup and the limited bandwidth of the domestic Internet connections. To create connections from home it is necessary to log in to the router, open more ports and forward them to the private IP of the performing computer. As this procedure varies depending on the type of router used, and not all performers had access to the administrative functions of their router, it became challenging to facilitate the setup over domestic Internet connections. For those who were able to access their routers, it became quite clear that the standard domestic network connections were not sufficient to handle the bandwidth needed for the performance.
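A rough calculation shows why bandwidth becomes a constraint. JackTrip streams uncompressed audio, so the payload rate of one stream is the sample rate multiplied by the bit depth and the channel count. The audio settings used for the piece are not stated here, so the figures below (44.1 kHz, 16-bit, mono) are assumptions, and packet overhead is ignored. Under those assumptions one stream needs 705.6 kbit/s, and a hub sending a copy to each of three performers needs about 2.1 Mbit/s of upload capacity.

```python
def stream_bps(sample_rate=44100, bit_depth=16, channels=1):
    """Payload bit rate of one uncompressed PCM stream, in bits per second."""
    return sample_rate * bit_depth * channels

def hub_upload_bps(receivers, **audio):
    """Upload needed by a hub that sends one copy of the stream to each receiver."""
    return receivers * stream_bps(**audio)

if __name__ == "__main__":
    # Assumed settings; the actual performance configuration is not documented here.
    print(stream_bps() / 1000, "kbit/s per stream")
    print(hub_upload_bps(3) / 1000, "kbit/s upload for three receivers")
```

Since domestic connections typically offer far less upload than download capacity, the hub's outgoing traffic is the likely bottleneck in this kind of topology.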

Cycle Four: “Orbit”, DIY Digital Instruments and Arduino Microcontrollers

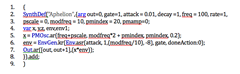

The fourth and final cycle of action research focused on do-it-yourself (DIY) digital instruments and physical computing with Arduino microcontrollers. The goal was to create a composition that incorporated elements from the previous cycles. The result was the composition Orbit, for the DIY instruments Orbital (Fig. 6), Apogee and Aphelion (Fig. 9), live coding in ChucK and the granulation / looping instrument Chronom. The DIY digital instruments are designed from easily accessible materials such that they could be built in a workshop with the members of the Cybernetic Orchestra. For the solo performance of Orbit, algorithmic control was added to the live-coding ChucK shreds Orb_Melody and Orb_Rhythm, producing Orb_RhythmAuto and Orb_MelodyAuto. The form of the composition Orbit is cyclical and accumulative and uses text chat for coordination, provided by David Ogborn’s EspGrid software (Ogborn 2012b).

The Orbital

The Orbital is designed to combine the input of an audio signal created from the physical components of the instrument with a synthesized signal manipulated by the incorporated sensors. The instrument consists of an aluminium bowl that rests on a base made of wood and cork. This allows for the manipulation of the bowl without triggering the capacitance sensor. The aluminium bowl and metal mesh lid act as a capacitance sensor that reacts to external contact. Two linear hall effect sensors are attached to the bowl to detect the magnetic field produced by the spherical magnet as it moves into proximity of the sensors. A force sensor is attached to the base and is linearly responsive to the amount of pressure applied to the active area of the sensor. Figure 7 shows the basic structure of the Orbital.

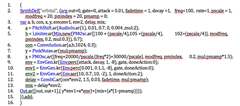

The four sensors are connected to an Arduino board sending the data via serial communication through the USB connection to Max/MSP. The signal is then scaled in Max/MSP and sent to SuperCollider. The Orbital outputs a total of five signals: the audio signal, two hall effect signals, one force signal and one capacitance signal. Figure 8 illustrates the SuperCollider code for the Orbital.
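The sensor chain (Arduino, serial, scaling, synthesis) can be mimicked in a short sketch. The Python below is not the Max/MSP patch or the Arduino firmware. It assumes a hypothetical serial format of one line per frame with four space-separated integers, and it assumes 10-bit readings (0 to 1023), which is what classic Arduino analog inputs return but may not match how the capacitance value was obtained.

```python
SENSOR_NAMES = ("hall_a", "hall_b", "force", "capacitance")  # hypothetical labels

def parse_frame(line, names=SENSOR_NAMES):
    """Parse one serial line such as '512 0 1023 256' into named readings."""
    parts = line.split()
    if len(parts) != len(names):
        raise ValueError("expected %d values, got %d" % (len(names), len(parts)))
    return {name: int(p) for name, p in zip(names, parts)}

def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linear rescaling, clamped so out-of-range readings stay within bounds."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))
    return out_lo + t * (out_hi - out_lo)

if __name__ == "__main__":
    frame = parse_frame("512 0 1023 256")
    # Map each raw reading onto a normalised 0..1 control value.
    controls = {k: scale(v, 0, 1023, 0.0, 1.0) for k, v in frame.items()}
    print(controls)
```

Normalising every sensor to the same range before it reaches the synthesis code keeps the mapping decisions in one place, which is the role the scaling stage plays in the signal path described above.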

The Aphelion

The Aphelion combines a hall effect and stretch sensor with two wooden sticks and a spherical magnet. As the magnet is brought into proximity of the hall effect sensor, the sound is triggered, and as it moves away, the sound decays. A metal screw is attached to the same end as the hall effect sensor such that the magnet can be fixed, creating a continuous stream of sound that is affected by manipulating the stretch sensor. A phase modulation UGen is used to synthesize the triggered sound. Similar to the Orbital, the hall effect sensor provides data for three parameters for the phase modulation UGen. Figure 10 shows the SuperCollider code for the Aphelion.
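The trigger-and-decay behaviour and the phase modulation can be sketched generically. The Python below is an illustration, not the SuperCollider code for the Aphelion: the threshold, the decay factor and the choice of carrier frequency, modulator frequency and modulation index as the three controlled parameters are assumptions.

```python
import math

def envelope_follow(readings, threshold=0.2, decay=0.9):
    """Amplitude per reading: track the sensor while the magnet is near,
    then decay exponentially once it moves away (values are assumptions)."""
    amp = 0.0
    out = []
    for r in readings:
        if r >= threshold:
            amp = r          # magnet in proximity: sound is triggered
        else:
            amp *= decay     # magnet moving away: sound decays
        out.append(amp)
    return out

def pm_sample(t, car_freq, mod_freq, index):
    """One sample of phase modulation: a sine carrier whose phase is
    offset by a sine modulator scaled by the modulation index."""
    return math.sin(2 * math.pi * car_freq * t
                    + index * math.sin(2 * math.pi * mod_freq * t))

if __name__ == "__main__":
    env = envelope_follow([0.0, 0.8, 0.1, 0.1, 0.1])
    sr = 44100
    block = [env[1] * pm_sample(n / sr, 440.0, 110.0, 2.0) for n in range(8)]
    print(env)
    print(block)
```

Fixing the magnet to the screw corresponds, in this sketch, to a reading that stays above the threshold, so the amplitude never enters the decay branch.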

The Apogee

The Apogee consists of a hall effect sensor and a force sensor attached to a sealed plastic bowl. The hall effect sensor is placed at the rim of the bowl with the force sensor on the bottom. The magnetic sphere is placed inside the bowl, the lid is securely sealed and the bowl is then flipped upside down, as in Figure 11. The magnet is manœuvred inside the bowl and sound is generated when the magnet comes into the sensory field of the hall effect sensor. Similar to both the Orbital and Aphelion, the hall effect sensor provides data to three parameters of the phase modulation UGen. The Apogee creates short percussive sounds of varying length based on the time between triggered events. Figure 12 shows the SuperCollider code for the Apogee.
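One way to derive a sound length from the time between triggered events is sketched below in Python. It is an illustration only: the source does not say how the interval is mapped to duration, so the direct, clamped mapping, the threshold and the limits used here are assumptions, and the function names are hypothetical.

```python
def onsets(readings, threshold=0.5):
    """Indices at which the sensor reading rises through the threshold,
    i.e. the moments the magnet enters the sensor's field."""
    out = []
    above = False
    for i, r in enumerate(readings):
        now = r >= threshold
        if now and not above:
            out.append(i)
        above = now
    return out

def note_lengths(onset_times, min_len=0.02, max_len=0.5):
    """One duration per event after the first: the gap since the previous
    event, clamped so the sounds stay short and percussive."""
    return [min(max_len, max(min_len, b - a))
            for a, b in zip(onset_times, onset_times[1:])]

if __name__ == "__main__":
    readings = [0.0, 0.9, 0.9, 0.0, 0.7, 0.0, 0.0, 0.0, 0.8]
    hits = onsets(readings)
    # Assume a hypothetical sensor polling rate of 100 readings per second.
    times = [i / 100 for i in hits]
    print(hits, note_lengths(times))
```

With this mapping, rapid rolling of the magnet past the sensor yields very short sounds, while slower gestures yield longer ones up to the upper limit.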

Chronom: The Real-time Granular Synthesis Instrument

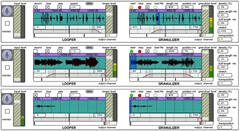

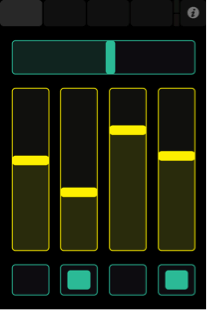

The Chronom is a live granulation and audio looping instrument. Live audio is recorded into a buffer, which is then accessible to the “granulizer” and “looper” control surfaces. The granulizer and looper are combined into a single, horizontally arranged unit that is implemented three times and stacked vertically, as shown in Figure 13. This allows for three different audio files to be recorded into separate buffers and manipulated during a performance.
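The record-then-loop structure can be modelled compactly. The following Python sketch is not the Chronom itself. It assumes a fixed-capacity buffer per unit and a looper that cycles over a selected region, and the class and method names are hypothetical.

```python
class LoopBuffer:
    """A fixed-capacity recording buffer with a simple region looper."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = []

    def record(self, samples):
        """Append live input until the buffer is full; the excess is dropped."""
        room = self.capacity - len(self.data)
        self.data.extend(samples[:room])

    def loop(self, start, length, n):
        """Return n samples by cycling over data[start:start + length]."""
        region = self.data[start:start + length]
        if not region:
            return [0.0] * n
        return [region[i % len(region)] for i in range(n)]

# Three independent units, mirroring the three stacked granulizer/looper rows.
units = [LoopBuffer(44100) for _ in range(3)]

if __name__ == "__main__":
    units[0].record([0.1, 0.2, 0.3, 0.4])
    print(units[0].loop(start=1, length=2, n=6))
```

Because each unit owns its buffer, recording into one row never disturbs material that is already looping in another.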

The Chronom is set up for gestural control using an iPhone or iPad running TouchOSC. TouchOSC is an application for iPad, iPhone, iPod touch and Android smartphones that uses Open Sound Control to send and receive messages over a Wi-Fi network.
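To make the message format concrete, the sketch below encodes a single Open Sound Control message by hand in Python, using only the standard library. The layout follows the OSC 1.0 format (null-terminated strings padded to four-byte boundaries, big-endian 32-bit floats). The address `/1/fader1` and the value are hypothetical examples, not the addresses used by the Chronom.

```python
import struct

def osc_string(s):
    """ASCII bytes, null-terminated and padded to a multiple of four bytes."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)

def osc_message(address, value):
    """Encode an OSC message carrying a single float32 argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)

if __name__ == "__main__":
    packet = osc_message("/1/fader1", 0.5)  # hypothetical control address
    print(len(packet), packet)
```

A packet built this way would normally be sent as one UDP datagram to the host and port on which the receiving program listens, which is how a handheld controller's fader movement reaches the laptop over Wi-Fi.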

The main challenge encountered in creating Orbit was the complexity added by shifting away from the built-in interaction affordances of the laptop (mouse, keyboard, etc.).

Conclusion

The four cycles of action research have been an exploration of composing and performing with digital music technology using DIY digital instruments, gestural control, GUIs and live coding for the performance formats of a solo performer, laptop orchestra and a geographically dispersed, networked laptop ensemble. Guided by phenomenology and the notion of the nonmodern, the body’s role in these contexts has been explored through the creation of digital instruments and the accompanying compositions, highlighting two issues that arise: the (dis)embodied experience and the disintegrating distinction between creative roles. The (dis)embodied experience arises from the “fracture” between the human gesture and the sonic result. The disintegrating distinction between creative roles occurs as the computer fills this gap, acting as composer, performer, sound designer, instrument designer and systems designer, at times simultaneously. This is particularly apparent with live coding as “[t]he algorithm can be seen as the controller or the controlled” (Collins, McLean, Rohrhuber and Ward 2003). The hybrid nature of laptop (orchestra) performance makes it an ideal focus for future work in the phenomenological experience of human-computer relations in musical contexts, in order to further develop improvisational digital musical systems that incorporate the combined strengths and efficiencies of diverse performance modes.

Bibliography

Cascone, Kim. “Grain, Sequence, System: Three Levels of Reception in the Performance of Laptop Music.” Contemporary Music Review 22/3 (2003), pp. 101–104.

Collins, Nick, Alex McLean, Julian Rohrhuber and Adrian Ward. “Live Coding in Laptop Performance.” Organised Sound 8/3 (December 2003), pp. 321–330.

Corness, Greg. “The Musical Experience through the Lens of Embodiment.” Leonardo Music Journal 18 (December 2008) “Why Live? — Performance in the Age of Digital Reproduction,” pp. 21–24.

Dudas, Richard. “‘Comprovisation’: The Various Facets of Composed Improvisation within Interactive Performance Systems.” Leonardo Music Journal 20 (December 2010) “Improvisation,” pp. 29–31.

Drummond, Jon. “Understanding Interactive Systems.” Organised Sound 14/2 (June 2009) “Interactivity in Musical Instruments,” pp. 124–133.

Fels, Sidney. “Designing for Intimacy: Creating New Interfaces for Musical Expression.” Proceedings of the IEEE 92/4 (April 2004), pp. 672–685.

Fiebrink, Rebecca, Ge Wang and Perry R. Cook. “Don’t Forget the Laptop: Using Native Input Capabilities for Expressive Musical Control.” NIME 2007. Proceedings of the 7th International Conference on New Instruments for Musical Expression (New York: New York University, 6–10 June 2007), pp. 164–167.

Fields, Kenneth. “Syneme: Live.” Organised Sound 17/1 (April 2012) “Networked Electroacoustic Music,” pp. 86–95.

Jenkins, Henry. Confronting the Challenges of Participatory Culture: Media Education for the 21st Century. The John D. and Catherine T. MacArthur Foundation Reports on Digital Media and Learning. Chicago IL: The MacArthur Foundation, 2006.

Kapur, Ajay, Ge Wang, Philip Davidson and Perry R. Cook. “Interactive Network Performance: A Dream worth dreaming?” Organised Sound 10/3 (December 2005) “Networked Music,” pp. 209–219.

Kemmis, Stephen and Robin McTaggart (Eds.). “Chapter 3.” In The Action Research Planner. 3rd edition. Edited by Stephen Kemmis and Robin McTaggart. Victoria, Australia: Deakin University Press, 1998, pp. 47–90.

Latour, Bruno. We Have Never Been Modern. Trans. Catherine Porter. Cambridge MA: Harvard University Press, 1993.

Magnusson, Thor. “Of Epistemic Tools: Musical instruments as cognitive extensions.” Organised Sound 14/2 (June 2009) “Interactivity in Musical Instruments,” pp. 168–176.

_____. “Designing Constraints: Composing and Performing with Digital Musical Systems.” Computer Music Journal 34/4 (Winter 2010) “Human-Computer Interaction 1,” pp. 63–73.

_____. “Confessions of a Live Coder.” ICMC 2011: “innovation : interaction : imagination”. Proceedings of the 2011 International Computer Music Conference (Huddersfield, UK: Centre for Research in New Music (CeReNeM) at the University of Huddersfield, 31 July – 5 August 2011).

Merleau-Ponty, Maurice. Phenomenology of Perception. Trans. Routledge and Kegan Paul. New York: Routledge Classics, 1962.

Ogborn, David. “Composing for a Networked, Pulse Based, Laptop Orchestra.” Organised Sound 17/1 (April 2012) “Networked Electroacoustic Music,” pp. 56–61.

_____. “EspGrid: A Protocol for Participatory Electronic Ensemble Performance.” AES 2012. Engineering brief presented at the 133rd Convention of the Audio Engineering Society (San Francisco CA, USA, 26–29 October 2012).

Paine, Garth. “Gesture and Morphology in Laptop Music Performance.” In The Oxford Handbook of Computer Music. New York: Oxford University Press, 2009, pp. 214–232.

_____. “Towards Unified Design Guidelines for New Interfaces for Musical Expression.” Organised Sound 14/2 (June 2009) “Interactivity in Musical Instruments,” pp. 142–155.

Schroeder, Franziska and Pedro Rebelo. “The Pontydian Performance: The performative layer.” Organised Sound 14/2 (June 2009) “Interactivity in Musical Instruments,” pp. 134–141.

Smallwood, Scott, Dan Trueman, Perry R. Cook and Ge Wang. “Composing for Laptop Orchestra.” Computer Music Journal 32/1 (Spring 2008) “Pattern Discovery and the Laptop Orchestra,” pp. 9–25.

Trueman, Dan. “Why a Laptop Orchestra?” Organised Sound 12/2 (July 2007) “Language,” pp. 171–179.

Wang, Ge. “The ChucK audio programming language: A strongly-timed and on the fly environ/mentality.” Unpublished PhD dissertation. Princeton University, 2008.

Welch, Chapman. “Programming Machines and People: Techniques for live improvisation with electronics.” Leonardo Music Journal 20 (December 2010) “Improvisation,” pp. 25–28.

Wessel, David and Matthew Wright. “Problems and Prospects for Intimate Music Control of Computers.” Computer Music Journal 26/3 (Fall 2002) “New Music Interfaces,” pp. 11–22.
